NVIDIA and xAI Unveil World’s Largest AI Supercomputer, Colossus, with Plans for Expansion

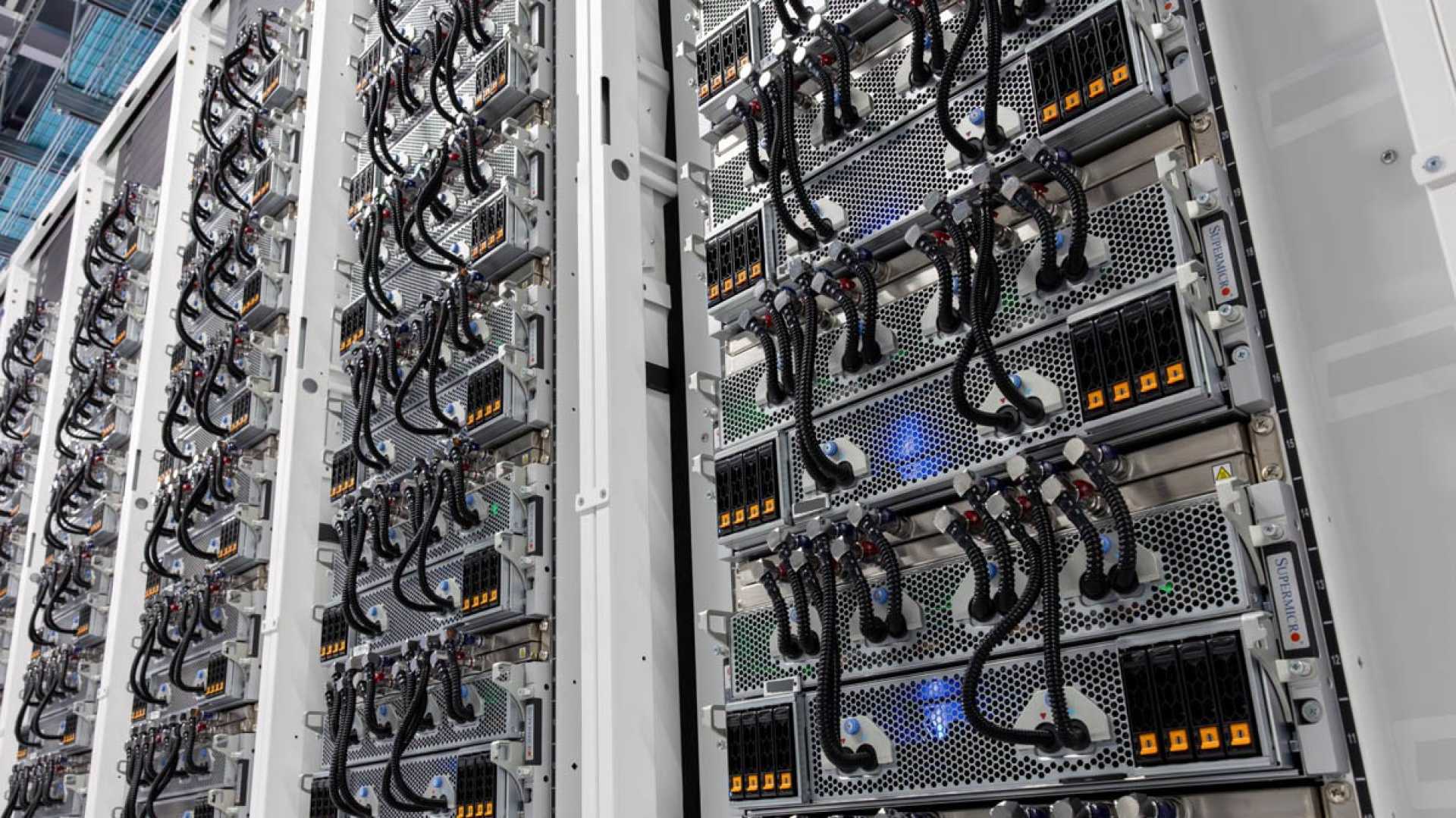

xAI, the artificial intelligence startup founded by Elon Musk, has launched Colossus, billed as the world’s largest and most powerful AI supercomputer. Located in Memphis, Tennessee, Colossus is powered by 100,000 NVIDIA Hopper GPUs and uses NVIDIA’s Spectrum-X Ethernet networking platform to connect them.

The Colossus cluster is designed to train xAI’s Grok family of large language models, including the upcoming Grok 3. xAI relies on this infrastructure to develop and deploy its models, and Musk has called Colossus “the most powerful training system in the world.”

The NVIDIA Spectrum-X Ethernet networking platform is central to Colossus’s performance. The network has maintained 95% data throughput with no application latency degradation and no packet loss, significantly outperforming standard Ethernet networks.

Musk has announced plans to double the size of the Colossus cluster to 200,000 NVIDIA GPUs, with the expansion including the upcoming H200 GPU. The move is part of xAI’s strategy to rival OpenAI and accelerate the development and deployment of its AI models.

The supercomputing facility in Memphis was built in collaboration with NVIDIA, Dell, and Supermicro, and was completed in just 122 days. This rapid deployment underscores the urgency and ambition behind xAI’s development efforts.

Separately, xAI is in talks with investors for a funding round that would value the company at around $40 billion, according to The Wall Street Journal.