
Meta Expands Teen Account Protections on Instagram, Facebook, Messenger

MENLO PARK, Calif. (April 8, 2025) — Meta announced significant updates to its platforms today, introducing expanded protections for teens using Instagram, Facebook, and Messenger. Effective in the coming months, these measures aim to provide safer online environments for users under 16, reflecting rising concerns from parents about their children’s digital safety.

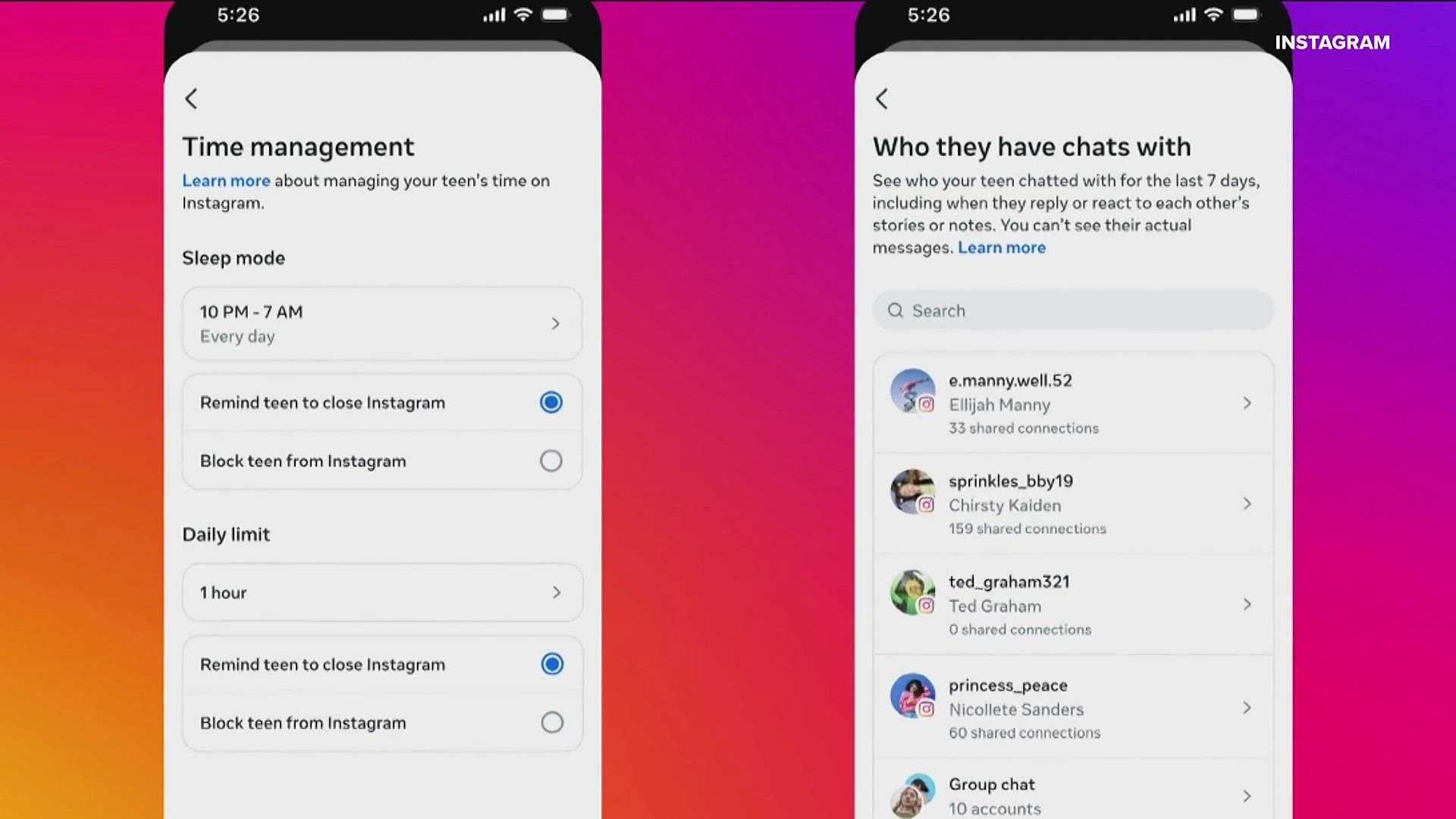

The changes include new restrictions on Instagram's live streaming and direct messaging features, requiring teens to get parental approval before they can go Live or loosen certain safety settings. "With these changes, teens under 16 will be prohibited from going Live unless their parents give them permission to do so," Meta stated. "We will also require teens to get parental permission to turn off our feature that blurs images containing suspected nudity in DMs." The announcement comes amid growing scrutiny of social media's impact on teen mental health.

Over the last year, Meta has rolled out what it calls Teen Accounts, which automatically apply stricter privacy settings for users under 16. According to company data, 97% of teens aged 13 to 15 have kept these restrictions in place since their introduction. Under these accounts, teens have private profiles, receive messages only from known contacts, and have notifications silenced overnight.

Meta’s move to extend Teen Accounts to Facebook and Messenger is part of a broader strategy to give parents more control over the content their children access. The rollout will begin in the U.S., U.K., Australia, and Canada, with plans to expand globally. “Teen Accounts on Facebook and Messenger will offer similar, automatic protections to limit inappropriate content and unwanted contact,” the company said.

The updates come amid mounting pressure from parents, lawmakers, and mental health advocates. The U.S. Surgeon General has previously warned of potential mental health risks associated with social media use among adolescents, adding to calls for stricter regulation.

In a recent survey conducted by Meta, parents said the existing Teen Accounts significantly eased their worries about online dangers. "We developed Teen Accounts with parents in mind and introduced protections that were responsive to their top concerns," the company said.

The changes are not without controversy. Critics have questioned Meta's motivations, suggesting that the updates may also serve to reduce the legal and reputational risks the company faces. Last year, a teenager filed a $5 million lawsuit against Meta over the platform's impact on mental health, concerns that are also the focus of ongoing investigations by several state attorneys general.

Meta's expansion of Teen Accounts seeks to improve the safety of younger users across its platforms. By tightening controls on live streaming and messaging, the company aims to offer a more age-appropriate experience while easing parental concerns. As the updates roll out, it remains to be seen whether they will be enough to rebuild trust among users and regulators alike.